Key points

- Vera C Rubin Observatory in Chile releases first celestial image

- Home to the world’s most powerful digital camera, it will film the night sky for a decade

- Detected over 2,000 new asteroids in 10 hours, a record-breaking feat

- Aims to shed light on dark matter, map the Milky Way and spot potential threats to Earth

- UK plays key role in data analysis and processing

Powerful new eye on the universe

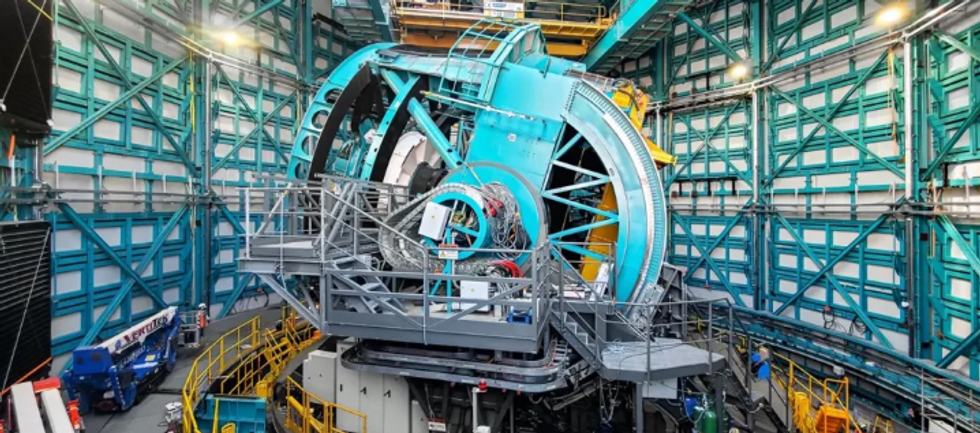

The Vera C Rubin Observatory, located atop Cerro Pachón in the Chilean Andes, has released its first image, a vibrant snapshot of a star-forming region 9,000 light years from Earth. The observatory, home to the world’s most powerful digital camera, promises to transform how we observe and understand the universe.

Its first observations signal the start of a decade-long survey known as the Legacy Survey of Space and Time, which will repeatedly capture wide-field images of the southern night sky.

Unprecedented detection power

In just 10 hours of observation, the telescope identified 2,104 previously unknown asteroids and seven near-Earth objects, a pace far beyond that of existing surveys. This capacity highlights the observatory’s potential to detect objects that might otherwise go unnoticed, including potentially hazardous asteroids.

One of the telescope’s strengths is its repeated coverage. By imaging the same areas every few nights, it can pick out subtle changes and transient events, such as supernovae or moving asteroids, and rapidly alert scientists worldwide.
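The change-spotting idea can be illustrated with a toy version of image differencing: subtract an earlier image of the same patch of sky from a new one and flag pixels that brightened or faded by more than a threshold. This is a minimal sketch, not Rubin's software; it assumes the two images are already aligned and equally sharp, which real pipelines must work hard to achieve before subtracting. The function `find_changes`, the threshold and the pixel values are all hypothetical.

```python
# Toy illustration of difference imaging: compare two visits to the same
# patch of sky and flag pixels whose brightness changed by more than a threshold.
# Assumes (hypothetically) that the images are already aligned pixel-for-pixel
# and equally sharp; real survey pipelines must correct for both first.

def find_changes(reference, new_image, threshold):
    """Return (row, col, delta) for every pixel that changed by more than threshold."""
    changes = []
    for r, (ref_row, new_row) in enumerate(zip(reference, new_image)):
        for c, (ref_px, new_px) in enumerate(zip(ref_row, new_row)):
            delta = new_px - ref_px
            if abs(delta) > threshold:
                changes.append((r, c, delta))
    return changes


# Made-up brightness values for the same 3x4 patch of sky on two nights.
night_1 = [
    [10, 10, 11, 10],
    [10, 50, 10, 10],  # a steady star at row 1, column 1
    [10, 10, 10, 10],
]
night_2 = [
    [10, 11, 10, 10],  # small noise-level fluctuations stay below the threshold
    [10, 50, 10, 10],  # the steady star cancels out when subtracted
    [10, 10, 10, 90],  # a new bright source, e.g. a supernova or passing asteroid
]

print(find_changes(night_1, night_2, threshold=5))
```

Here only the pixel at row 2, column 3 is flagged; the steady star cancels out in the subtraction, which is why repeated imaging makes new or moving sources stand out.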

A feat of engineering

The telescope’s design includes a unique three-mirror system that captures and focuses light with remarkable clarity. Light enters via the primary mirror (8.4 metres), reflects onto a secondary mirror (3.4 metres), then onto a tertiary mirror (4.8 metres), before reaching the camera. Each surface must remain spotless, as even a speck of dust could distort the data.

The camera itself is an engineering marvel. Measuring 1.65 by 3 metres and weighing 2,800 kilograms, it has 3,200 megapixels, about 67 times as many as the 48-megapixel main camera of the iPhone 16 Pro. Displaying a single image in full would take about 400 Ultra HD TV screens. The camera captures one image roughly every 40 seconds for up to 12 hours a night.

Together, the large mirrors and sensitive camera allow the observatory to detect extremely faint, distant objects. Because light takes time to travel, the most distant of these are seen as they were much earlier in the universe’s history. As commissioning scientist Elana Urbach explained, this is key to “understanding the history of the Universe”.
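The camera figures quoted in this section can be checked with simple arithmetic. This sketch assumes the iPhone 16 Pro comparison refers to its 48-megapixel main camera and that an Ultra HD screen has 3,840 × 2,160 pixels; both are assumptions made here for the calculation.

```python
# Back-of-envelope check of the camera figures.
# Assumptions made here: the iPhone 16 Pro comparison uses its 48-megapixel
# main camera, and an Ultra HD (4K) screen shows 3840 x 2160 pixels.

RUBIN_PIXELS = 3_200_000_000
IPHONE_PIXELS = 48_000_000
UHD_SCREEN_PIXELS = 3840 * 2160  # 8,294,400 pixels

ratio_to_iphone = RUBIN_PIXELS / IPHONE_PIXELS
screens_needed = RUBIN_PIXELS / UHD_SCREEN_PIXELS

# One image roughly every 40 seconds over a night of up to 12 hours.
images_per_night = (12 * 60 * 60) // 40

print(f"{ratio_to_iphone:.1f} times the iPhone's pixel count")
print(f"{screens_needed:.0f} Ultra HD screens for one full image")
print(f"{images_per_night} images in a 12-hour night")
```

The results, roughly 66.7 times the iPhone's pixel count, about 386 screens and up to 1,080 images a night, are consistent with the rounded figures in the text.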

Protecting the dark sky

The observatory’s remote location was chosen for its high altitude, dry air, and minimal light pollution. Maintaining complete darkness is critical; even the use of full-beam headlights is restricted on the access road. Inside, engineers are tasked with eliminating any stray light sources, such as rogue LEDs, to protect the telescope’s sensitivity to faint starlight.

UK collaboration and scientific goals

The UK is a key partner in the project, with several institutions involved in developing data processing centres that will handle the telescope’s enormous data flow, which is expected to generate around 10 million alerts per night.
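To give a sense of scale, the nightly alert figure can be turned into average rates. This sketch assumes the 10 million alerts are spread evenly over a 12-hour night with one image every 40 seconds, the upper figures the article gives for the camera's nightly operation; real traffic is bursty and nights vary in length, so these are only rough averages.

```python
# Rough average alert rates. Assumes ~10 million alerts spread evenly over a
# 12-hour night, with one image every ~40 seconds (figures from the article).
ALERTS_PER_NIGHT = 10_000_000
NIGHT_SECONDS = 12 * 60 * 60             # 43,200 seconds
IMAGES_PER_NIGHT = NIGHT_SECONDS // 40   # 1,080 images

alerts_per_second = ALERTS_PER_NIGHT / NIGHT_SECONDS
alerts_per_image = ALERTS_PER_NIGHT / IMAGES_PER_NIGHT

print(f"~{alerts_per_second:.0f} alerts per second")
print(f"~{alerts_per_image:,.0f} alerts per image")
```

That works out to roughly 231 alerts per second, or about 9,259 per image. Shorter nights would push both averages higher, so these are conservative estimates.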

British astronomers will use the telescope to address fundamental questions about the universe. Professor Alis Deason at Durham University says the Rubin data could push the known boundaries of the Milky Way. Currently, scientists can observe stars up to 163,000 light years away, but the new telescope could extend that reach to 1.2 million light years.

She also hopes to examine the Milky Way’s stellar halo, a faint region made up largely of old stars and the remnants of smaller galaxies that our own has torn apart, as well as the elusive satellite galaxies that orbit the Milky Way.
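The jump from 163,000 to 1.2 million light years can be put in perspective with the inverse-square law: the light we receive from a star falls off with the square of its distance. This sketch assumes two stars of identical true brightness and ignores dust and other complications; it illustrates why reaching farther requires detecting much fainter light, not how Rubin's reach was actually estimated.

```python
import math

# Inverse-square comparison of the same star seen at two distances.
# Assumes identical intrinsic brightness and ignores dust and other effects.

NEAR_LY = 163_000    # current reach quoted in the article
FAR_LY = 1_200_000   # possible Rubin reach quoted in the article

distance_ratio = FAR_LY / NEAR_LY
flux_ratio = distance_ratio ** 2  # received light falls as 1 / distance^2
# Astronomical magnitudes: 2.5 * log10(flux ratio) = 5 * log10(distance ratio).
magnitude_difference = 5 * math.log10(distance_ratio)

print(f"{distance_ratio:.1f} times farther")
print(f"{flux_ratio:.0f} times fainter")
print(f"{magnitude_difference:.1f} magnitudes fainter")
```

A star about 7.4 times farther away appears about 54 times fainter, or roughly 4.3 magnitudes on the logarithmic scale astronomers use.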

Looking for Planet Nine

Among the more intriguing goals is the search for the mysterious Planet Nine. If the proposed ninth planet exists, it is thought to lie up to 700 times farther from the Sun than Earth does, too far for most telescopes to detect. Scientists believe the Vera Rubin Observatory may be powerful enough to confirm or refute its existence within its first year.
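A rough calculation shows why such a distant planet is so hard to spot. A planet shines only by reflected sunlight, which weakens with the square of its distance from the Sun on the way out and, since Earth sits close to the Sun on this scale, by roughly the square of that distance again on the way back, so its brightness falls roughly with the fourth power of distance. This sketch compares two hypothetical bodies of identical size and reflectivity, one at Neptune's distance of about 30 times the Earth-Sun distance and one at 700; the real size of Planet Nine, if it exists, is unknown.

```python
# Rough dimming of a body that shines only by reflected sunlight.
# Assumptions: identical size and reflectivity for both bodies, and the
# observer sits near the Sun, so brightness scales roughly as 1 / r**4
# (1/r^2 for sunlight reaching the body, 1/r^2 again for light returning).

NEPTUNE_AU = 30        # Neptune orbits roughly 30 times the Earth-Sun distance
PLANET_NINE_AU = 700   # upper distance quoted in the article


def relative_brightness(distance_au, reference_au):
    """Brightness of a body at distance_au relative to an identical body at reference_au."""
    return (reference_au / distance_au) ** 4


dimming = 1 / relative_brightness(PLANET_NINE_AU, NEPTUNE_AU)
print(f"about {dimming:,.0f} times fainter than an identical body at Neptune's distance")
```

Under these assumptions, the more distant body would appear nearly 300,000 times fainter.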

A new era in astronomy

Professor Catherine Heymans, Astronomer Royal for Scotland, described the release of the first image as the culmination of a 25-year journey. “For decades we wanted to build this phenomenal facility and to do this type of survey,” she said. “It’s a once-in-a-generation moment.”

With its unmatched ability to capture deep-sky imagery and monitor celestial motion over time, the Vera Rubin Observatory is expected to reshape our understanding of the universe, from dark matter to planetary defence.

“It’s going to be the largest data set we’ve ever had to look at our galaxy with,” says Prof Deason. “It will fuel what we do for many, many years.”