A NEW immersive play performed in a Bradford car park will explore the significance of car culture within British Asian communities and how it acts as an escape from racism.

Performed in Oastler Market car park overlooking the city centre, Peaceophobia examines rising Islamophobia from the perspective of three young British Pakistani men from a modified car club.

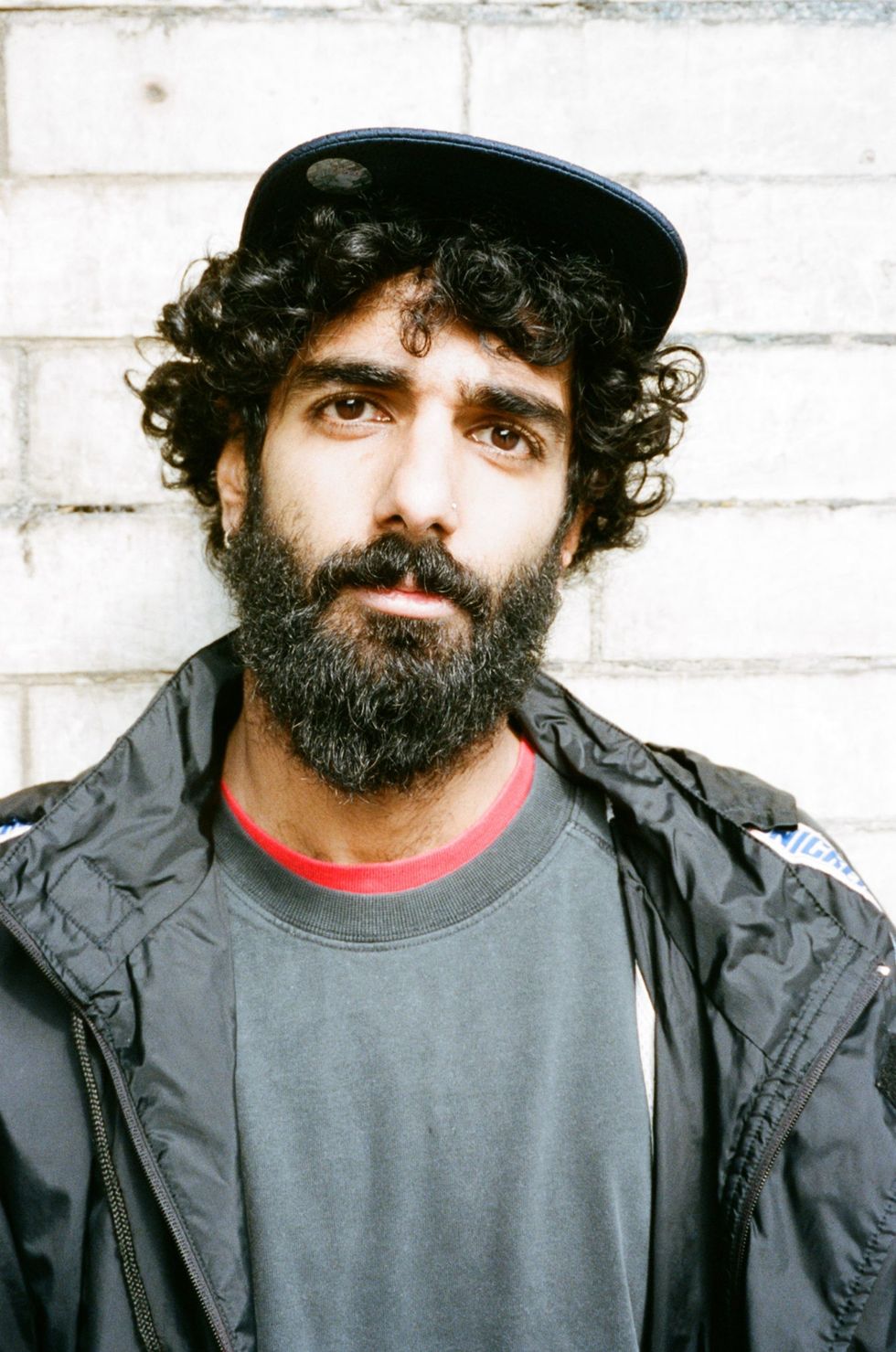

Co-written by acclaimed playwright Zia Ahmed, the story shows how the men use their love of cars and their faith as a sanctuary from the world around them. Before writing the script, Ahmed spent time with the performers to learn about their passion for car culture, and he quickly saw how much they loved their vehicles.

“I think a lot of that (love) is to do with the agency, it is their space, and it is something they own,” he told Eastern Eye. “They can put their personality onto it and it’s not something that they need to suppress – they can go as loud or as big as they want.”

Modifying their vehicles is a creative outlet and can act as a source of escapism, Ahmed added. “There is a lot of joy and pride that comes into maintaining the cars,” he said.

Although the play centres on the prominent car culture within Bradford’s Asian community, London-based writer Ahmed has little experience with motors. “I can’t actually drive,” he laughed.

The production was co-created by members of Speakers’ Corner, a political and creative collective of women and girls. Although the collective is all-female, the show focuses primarily on the lives of Muslim men.

While speaking to journalists to promote the show, Ahmed admitted the creators had been asked several times why the play centred on Muslim men.

“We’ve been asked that question a lot and it’s like, who else has been talking about (the men)?” he said. “The narrative for a young Muslim man in this country has always been around criminality and poverty and while those things will be touched upon, the focus of the show is the cars and faith in a positive way, not just solely about Islamophobia.

“As much as Islamophobia impacts in a negative way, there’s still the love for the faith that keeps you going.”

The show offers the men a platform, Ahmed added: a place to share their stories and experiences.

Iram Rehman of Speakers’ Corner said the project began as a campaign to promote the message that Islam comes from peace. “We are part of a movement of young people using their voices to make a positive change and promote peace instead of being silenced,” Rehman explained.

The show’s title is a spin on Islamophobia, Ahmed noted. He said: “It’s about three guys trying to find peace through their religion – and how can you have a phobia of peace?”

The play is performed by three men: Mohammad Ali Yunis, Casper Ahmed and Sohail Hussain. The trio keep their real names in the play, a conscious decision by the creators.

“They are playing versions of themselves and (the play) has come from workshopping with them and listening to their stories,” Ahmed explained. “Of course, there’s a performance element but (Peaceophobia) is unashamedly about these three Pakistani-Muslim men from Bradford.”

The project was conceived before the Covid-19 pandemic broke out last year, and Ahmed had wondered whether it would still be timely. However, he noted that Muslims are still being profiled and discriminated against because of their religion.

“Islamophobia isn’t gone, it didn’t stop during the pandemic,” he said. “But the show is not just about that – it’s also about the happier, hopeful side of faith and the love of the cars.

“Whatever preconceptions that you might have about the cars, seeing the detail and the care and the love that they have (for their vehicles), I think anyone can relate to that.”

Peaceophobia plays at Oastler Market car park, Bradford, from Friday (10) to next Saturday (18); then at Contact Manchester from September 29 to October 2.