ChatGPT-4o was retired on February 13 after launching on May 13, 2024.

Some users said they formed deep personal connections with the model.

Experts continue to debate safety risks and emotional impact.

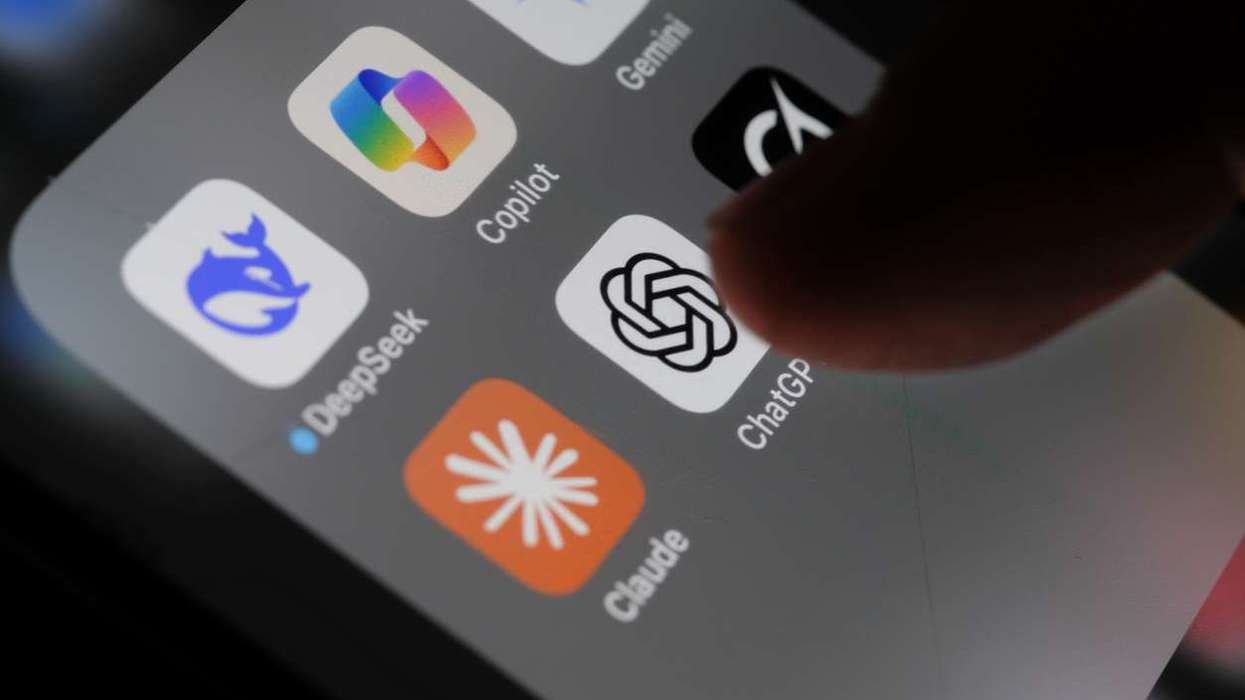

The retirement of ChatGPT-4o by OpenAI has sparked strong reactions across its user base, with many saying the move highlights the growing role of AI companions in people’s daily lives. The older model, launched on May 13, 2024, was removed on February 13, prompting conversations about emotional reliance, safety concerns and the evolving responsibilities of AI developers.

For some users, the change felt deeply personal. One user, who goes by Rae, reportedly began using the model after a divorce, initially asking about diet and skincare before conversations became a daily routine. Over time, she says the interaction developed into what felt like a genuine connection.

Rae described how their exchanges evolved, saying she spent more time chatting and eventually gave the bot a name, according to a news report. She said they built a shared narrative over months, including an online wedding staged within their conversations, and that it came to feel increasingly real.

When a chatbot feels personal

As OpenAI prepared to retire the model, some users recorded their final chats. Rae said she saved her last exchanges before switching to an alternative platform she had created to continue the experience. She said the new version felt different and compared it to someone returning from a long trip, according to a news report.

Others also spoke about the support they felt they received. Some users said the chatbot offered encouragement with everyday tasks or helped them take small social steps. Ursie Hart said she began using an AI companion while struggling with ADHD and found it helped with routines and emotional support, describing it as “being a friend” during difficult periods, as quoted in a news report.

Hart added that she had gathered testimonies from hundreds of people who used the model as a companion or accessibility tool, and she expressed concern for those losing that specific interaction, according to a news report.

Support, criticism and safety concerns

The same qualities that drew users closer have also raised concerns among researchers and observers, who have questioned whether highly agreeable responses could reinforce unhealthy thinking in some cases. Reports note the model has been named in multiple legal cases in the US, including lawsuits alleging harmful outcomes.

OpenAI described incidents linked to the model as “incredibly heartbreaking situations,” reportedly adding that its thoughts were with those affected. The company said it introduced versions with stricter safety features and allowed paying users to keep access temporarily while improvements were made.

It also said it continues to refine training to better recognise distress and guide users towards real-world support, and that it is reportedly working with clinicians and experts.

Some users have since formed support groups to discuss the change. Etienne Brisson, who founded The Human Line Project, reportedly said he expected some people to grieve the loss even as he hoped the removal might reduce harm.

OpenAI reported that about 0.1 per cent of customers used ChatGPT-4o daily. Applied to a base of roughly 100 million weekly users, that share works out to around 100,000 people using the model each day. A petition opposing the shutdown gathered more than 20,000 signatures.

Online communities have seen messages from people struggling with the change, while others have tried to recreate the experience on newer systems or migrate saved chats. The episode has added to a wider debate about how technology companies balance engaging conversational tools with safeguards as AI becomes more embedded in everyday life.